Use OpenELB in BGP Mode

This document demonstrates how to use OpenELB in BGP mode to expose a Service backed by two Pods. The BgpConf, BgpPeer, Eip, Deployment, and Service described in this document are examples only. Customize the commands and YAML configurations based on your requirements.

Instead of a real router, this document uses a Linux server running BIRD to simulate a router, so that users without a real router can still test OpenELB in BGP mode.

Prerequisites

- You need to prepare a Kubernetes cluster where OpenELB has been installed.

- You need to prepare a Linux server that can communicate with the Kubernetes cluster nodes over the network. BIRD will be installed on the server to simulate a BGP router.

- If you use a real router instead of BIRD, the router must support BGP and Equal-Cost Multi-Path (ECMP) routing. The router must also support receiving multiple equivalent routes from the same neighbor.

This document uses the following devices as an example:

| Device Name | IP Address | Description |
|---|---|---|
| master1 | 192.168.0.2 | Kubernetes cluster master, where OpenELB is installed. |
| worker-p001 | 192.168.0.3 | Kubernetes cluster worker 1 |
| worker-p002 | 192.168.0.4 | Kubernetes cluster worker 2 |
| i-f3fozos0 | 192.168.0.5 | BIRD machine, where BIRD will be installed to simulate a BGP router. |

Step 1: Install and Configure BIRD

If you use a real router, you can skip this step and perform configuration on the router instead.

Log in to the BIRD machine and run the following commands to install BIRD:

```bash
sudo add-apt-repository ppa:cz.nic-labs/bird
sudo apt-get update
sudo apt-get install bird
sudo systemctl enable bird
```

NOTE

- BIRD 1.5 does not support ECMP. To use all features of OpenELB, you are advised to install BIRD 1.6 or later.

- The preceding commands apply only to Debian-based OSs such as Debian and Ubuntu. On Red Hat-based OSs such as RHEL and CentOS, use yum instead.

- You can also install BIRD according to the official BIRD documentation.

Run the following command to edit the BIRD configuration file:

```bash
sudo vi /etc/bird/bird.conf
```

Configure the BIRD configuration file as follows:

```
router id 192.168.0.5;

protocol kernel {
    scan time 60;
    import none;
    export all;
    merge paths on;
}

protocol device {
    scan time 60;
}

protocol bgp neighbor1 {
    local as 50001;
    neighbor 192.168.0.2 port 17900 as 50000;
    source address 192.168.0.5;
    import all;
    export all;
    enable route refresh off;
    add paths on;
}
```

NOTE

For test usage, you only need to customize the following fields in the preceding configuration:

- `router id`: Router ID of the BIRD machine, which is usually set to the IP address of the BIRD machine.
- `protocol bgp neighbor1`:
  - `local as`: ASN of the BIRD machine, which must be different from the ASN of the Kubernetes cluster.
  - `neighbor`: Master node IP address, BGP port number, and ASN of the Kubernetes cluster. Use port 17900 instead of the default BGP port 179 to avoid conflicts with other BGP components in the system.
  - `source address`: IP address of the BIRD machine.

If multiple nodes in the Kubernetes cluster are used as BGP neighbors, you need to configure multiple BGP neighbors in the BIRD configuration file.

For details about the BIRD configuration file, see the official BIRD documentation.
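For the multi-neighbor case, the following Python sketch renders a BIRD 1.6-style `bird.conf` with one `protocol bgp` block per Kubernetes node, reusing the fields from the example configuration. The helper name `render_bird_conf`, the `neighbor<N>` block naming, and the assumption that every node accepts BGP on port 17900 with the same cluster ASN are illustrative; BIRD 2.x uses a different syntax, so review the output against the official BIRD documentation before using it.

```python
import ipaddress


def render_bird_conf(router_ip: str, local_as: int, cluster_as: int,
                     node_ips: list[str], port: int = 17900) -> str:
    """Hypothetical helper: render a bird.conf with one BGP session per node.

    Mirrors the example configuration, adding a 'protocol bgp neighborN'
    block for each Kubernetes node IP address.
    """
    # The BIRD machine and the Kubernetes cluster must use different ASNs.
    if local_as == cluster_as:
        raise ValueError("local as must differ from the cluster ASN")
    # Validate addresses early; ip_address() raises ValueError on bad input.
    ipaddress.ip_address(router_ip)
    for ip in node_ips:
        ipaddress.ip_address(ip)

    parts = [
        f"router id {router_ip};",
        "protocol kernel {\n    scan time 60;\n    import none;\n"
        "    export all;\n    merge paths on;\n}",
        "protocol device {\n    scan time 60;\n}",
    ]
    for i, node_ip in enumerate(node_ips, start=1):
        parts.append(
            f"protocol bgp neighbor{i} {{\n"
            f"    local as {local_as};\n"
            f"    neighbor {node_ip} port {port} as {cluster_as};\n"
            f"    source address {router_ip};\n"
            "    import all;\n    export all;\n"
            "    enable route refresh off;\n    add paths on;\n}"
        )
    return "\n\n".join(parts) + "\n"


if __name__ == "__main__":
    # Example values from the device table in this document.
    print(render_bird_conf("192.168.0.5", 50001, 50000,
                           ["192.168.0.2", "192.168.0.3", "192.168.0.4"]))
```

Generating the file keeps the `local as`, cluster ASN, and port identical across all neighbor blocks, which is where hand-edited multi-neighbor configurations most often drift apart.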


Run the following command to restart BIRD:

```bash
sudo systemctl restart bird
```

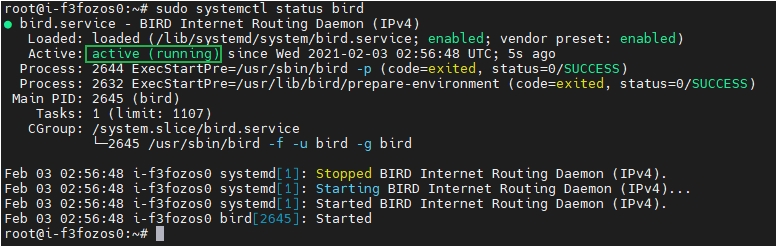

Run the following command to check whether the status of BIRD is active:

```bash
sudo systemctl status bird
```

NOTE

If the status of BIRD is not active, you can run the following command to check the error logs:

```bash
journalctl -f -u bird
```

Step 2: Create a BgpConf Object

The BgpConf object is used to configure the local (Kubernetes cluster) BGP properties on OpenELB.

Run the following command to create a YAML file for the BgpConf object:

```bash
vi bgp-conf.yaml
```

Add the following information to the YAML file:

```yaml
apiVersion: network.kubesphere.io/v1alpha2
kind: BgpConf
metadata:
  name: default
spec:
  as: 50000
  listenPort: 17900
  routerId: 192.168.0.2
```

NOTE

For details about the fields in the BgpConf YAML configuration, see Configure Local BGP Properties Using BgpConf.

Run the following command to create the BgpConf object:

```bash
kubectl apply -f bgp-conf.yaml
```
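If you generate manifests from a script instead of editing them by hand, the following Python sketch builds the same BgpConf object as a dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The helper name `build_bgp_conf` and its validation rules (4-byte ASN range, TCP port range, dotted IPv4 router ID) are assumptions for illustration, not checks performed by OpenELB.

```python
import ipaddress
import json


def build_bgp_conf(asn: int, listen_port: int, router_id: str) -> dict:
    """Hypothetical helper: build the BgpConf object from this step as a dict."""
    # 4-byte ASNs range from 1 to 4294967295; 0 is reserved.
    if not 1 <= asn <= 4294967295:
        raise ValueError(f"invalid ASN: {asn}")
    if not 1 <= listen_port <= 65535:
        raise ValueError(f"invalid port: {listen_port}")
    # The router ID is written in dotted IPv4 notation; bad input raises
    # ipaddress.AddressValueError, a subclass of ValueError.
    ipaddress.IPv4Address(router_id)
    return {
        "apiVersion": "network.kubesphere.io/v1alpha2",
        "kind": "BgpConf",
        "metadata": {"name": "default"},
        "spec": {"as": asn, "listenPort": listen_port, "routerId": router_id},
    }


if __name__ == "__main__":
    # Pipe into kubectl, for example: python3 bgp_conf.py | kubectl apply -f -
    print(json.dumps(build_bgp_conf(50000, 17900, "192.168.0.2"), indent=2))
```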

Step 3: Create a BgpPeer Object

The BgpPeer object is used to configure the peer (BIRD machine) BGP properties on OpenELB.

Run the following command to create a YAML file for the BgpPeer object:

```bash
vi bgp-peer.yaml
```

Add the following information to the YAML file:

```yaml
apiVersion: network.kubesphere.io/v1alpha2
kind: BgpPeer
metadata:
  name: bgp-peer
spec:
  conf:
    peerAs: 50001
    neighborAddress: 192.168.0.5
```

NOTE

For details about the fields in the BgpPeer YAML configuration, see Configure Peer BGP Properties Using BgpPeer.

Run the following command to create the BgpPeer object:

```bash
kubectl apply -f bgp-peer.yaml
```
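The BgpConf, BgpPeer, and BIRD settings must mirror each other: the BIRD `neighbor ... port ... as ...` statement must match the BgpConf `listenPort` and `as`, and the BgpPeer `peerAs` and `neighborAddress` must match BIRD's `local as` and `source address`. The following Python sketch checks this, assuming the BIRD configuration contains a single `protocol bgp` block formatted like the example in Step 1. The `check_peering` name and its regular expressions are illustrative.

```python
import re


def check_peering(bird_conf: str, bgp_conf_spec: dict,
                  bgp_peer_conf: dict) -> list[str]:
    """Hypothetical checker: list mismatches between bird.conf and OpenELB objects."""
    problems = []
    neighbor = re.search(r"neighbor\s+(\S+)\s+port\s+(\d+)\s+as\s+(\d+);", bird_conf)
    local_as = re.search(r"local\s+as\s+(\d+);", bird_conf)
    source = re.search(r"source\s+address\s+(\S+);", bird_conf)
    if not (neighbor and local_as and source):
        return ["bird.conf is missing neighbor, local as, or source address"]

    # BIRD's view of OpenELB must match the BgpConf object.
    if int(neighbor.group(2)) != bgp_conf_spec["listenPort"]:
        problems.append("BIRD neighbor port != BgpConf listenPort")
    if int(neighbor.group(3)) != bgp_conf_spec["as"]:
        problems.append("BIRD neighbor ASN != BgpConf as")
    # OpenELB's view of BIRD (the BgpPeer object) must match the BIRD machine.
    if int(local_as.group(1)) != bgp_peer_conf["peerAs"]:
        problems.append("BIRD local as != BgpPeer peerAs")
    if source.group(1) != bgp_peer_conf["neighborAddress"]:
        problems.append("BIRD source address != BgpPeer neighborAddress")
    return problems
```

With the example values from Steps 1 to 3, the function returns an empty list; changing `listenPort` to the default port 179, for instance, yields a single port mismatch.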

Step 4: Create an Eip Object

The Eip object functions as an IP address pool for OpenELB.

Run the following command to create a YAML file for the Eip object:

```bash
vi bgp-eip.yaml
```

Add the following information to the YAML file:

```yaml
apiVersion: network.kubesphere.io/v1alpha2
kind: Eip
metadata:
  name: bgp-eip
spec:
  address: 172.22.0.2-172.22.0.10
```

NOTE

The `address` field can contain one or more IP addresses in any valid notation, such as a single address, a CIDR block, or a range like the one above, but it must not include IP addresses that are already in use. For details about the fields in the Eip YAML configuration, see Configure IP Address Pools Using Eip.

Run the following command to create the Eip object:

```bash
kubectl apply -f bgp-eip.yaml
```
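To see which addresses an Eip `address` value covers, and to catch overlaps with addresses already in use, the following Python sketch expands a value written as a single address, a CIDR block, or a range. It assumes IPv4 and one value per call, and it counts every address in a CIDR block, including the network and broadcast addresses; OpenELB's own parsing may differ, so treat the result as an approximation. The helper names are hypothetical.

```python
import ipaddress


def expand_eip_address(value: str) -> list[ipaddress.IPv4Address]:
    """Hypothetical helper: expand one Eip 'address' value into IPv4 addresses.

    Accepts '172.22.0.2', '172.22.0.0/28', or '172.22.0.2-172.22.0.10'.
    Assumption: a CIDR block contributes every address, including the
    network and broadcast addresses.
    """
    if "-" in value:
        start_s, end_s = value.split("-", 1)
        start = ipaddress.IPv4Address(start_s.strip())
        end = ipaddress.IPv4Address(end_s.strip())
        if end < start:
            raise ValueError(f"range end before start: {value}")
        # Inclusive range: both endpoints belong to the pool.
        return [ipaddress.IPv4Address(i) for i in range(int(start), int(end) + 1)]
    if "/" in value:
        return list(ipaddress.IPv4Network(value.strip(), strict=False))
    return [ipaddress.IPv4Address(value.strip())]


def conflicts(value: str, in_use: list[str]) -> list[str]:
    """Return the in-use addresses that fall inside the Eip pool."""
    pool = set(expand_eip_address(value))
    return [ip for ip in in_use if ipaddress.IPv4Address(ip) in pool]


if __name__ == "__main__":
    pool = expand_eip_address("172.22.0.2-172.22.0.10")
    print(len(pool), pool[0], pool[-1])
```

For the example pool, the script prints `9 172.22.0.2 172.22.0.10`, so up to nine LoadBalancer Services can receive a dedicated address from `bgp-eip` without IP sharing.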

Step 5: Create a Deployment

The following creates a Deployment of two Pods using the luksa/kubia image. Each Pod returns its own Pod name to external requests.

Run the following command to create a YAML file for the Deployment:

```bash
vi bgp-openelb.yaml
```

Add the following information to the YAML file:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: bgp-openelb
spec:
  replicas: 2
  selector:
    matchLabels:
      app: bgp-openelb
  template:
    metadata:
      labels:
        app: bgp-openelb
    spec:
      containers:
        - image: luksa/kubia
          name: kubia
          ports:
            - containerPort: 8080
```

Run the following command to create the Deployment:

```bash
kubectl apply -f bgp-openelb.yaml
```

Step 6: Create a Service

Run the following command to create a YAML file for the Service:

```bash
vi bgp-svc.yaml
```

Add the following information to the YAML file:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: bgp-svc
  annotations:
    lb.kubesphere.io/v1alpha1: openelb
    # For versions below 0.6.0, you also need to specify the protocol
    # protocol.openelb.kubesphere.io/v1alpha1: bgp
    eip.openelb.kubesphere.io/v1alpha2: bgp-eip
spec:
  selector:
    app: bgp-openelb
  type: LoadBalancer
  ports:
    - name: http
      port: 80
      targetPort: 8080
  externalTrafficPolicy: Cluster
```

NOTE

- You must set `spec:type` to `LoadBalancer`.

- The `lb.kubesphere.io/v1alpha1: openelb` annotation specifies that the Service uses OpenELB.

- The `protocol.openelb.kubesphere.io/v1alpha1: bgp` annotation specifies that OpenELB is used in BGP mode. It is needed only for versions earlier than 0.6.0 and is deprecated as of 0.6.0.

- The `eip.openelb.kubesphere.io/v1alpha2: bgp-eip` annotation specifies the Eip object used by OpenELB. If this annotation is not configured, OpenELB automatically selects an available Eip object. Alternatively, you can remove this annotation and either use the `spec:loadBalancerIP` field (for example, `spec:loadBalancerIP: 172.22.0.2`) or add the annotation `eip.openelb.kubesphere.io/v1alpha1: 172.22.0.2` to assign a specific IP address to the Service. If you set `spec:loadBalancerIP` of multiple Services to the same value to share one IP address (the Services are distinguished by their Service ports), you must set `spec:ports:port` to different values and `spec:externalTrafficPolicy` to `Cluster` for these Services. For more details about IPAM, see openelb ip address assignment.

- If `spec:externalTrafficPolicy` is set to `Cluster` (default value), OpenELB uses all Kubernetes cluster nodes as the next hops destined for the Service.

- If `spec:externalTrafficPolicy` is set to `Local`, OpenELB uses only Kubernetes cluster nodes that contain Pods as the next hops destined for the Service.
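The IP sharing rule for `spec:loadBalancerIP` can be expressed as a small check. The following Python sketch takes simplified Service descriptions (plain dictionaries with `name`, `loadBalancerIP`, `ports`, and optional `externalTrafficPolicy` keys, an assumed shape rather than the full Kubernetes Service schema) and reports Services that share an IP address but reuse a port or do not use the `Cluster` policy. The function name is hypothetical.

```python
from collections import defaultdict


def check_ip_sharing(services: list[dict]) -> list[str]:
    """Hypothetical check for Services that share one loadBalancerIP."""
    by_ip = defaultdict(list)
    for svc in services:
        if svc.get("loadBalancerIP"):
            by_ip[svc["loadBalancerIP"]].append(svc)

    errors = []
    for ip, group in by_ip.items():
        if len(group) < 2:
            continue  # The IP address is not shared, so the rule does not apply.
        seen_ports = {}  # Service port -> name of the first Service using it.
        for svc in group:
            # Shared IP addresses require Cluster, the Kubernetes default policy.
            if svc.get("externalTrafficPolicy", "Cluster") != "Cluster":
                errors.append(f"{svc['name']}: shares {ip} but is not Cluster")
            for port in svc["ports"]:
                if port in seen_ports:
                    errors.append(f"{svc['name']}: port {port} on {ip} "
                                  f"already used by {seen_ports[port]}")
                else:
                    seen_ports[port] = svc["name"]
    return errors
```

For example, two Services on 172.22.0.2 that expose ports 80 and 443 with the default policy pass the check, while a third Service on the same address that reuses port 80 with `Local` produces two errors.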

Run the following command to create the Service:

```bash
kubectl apply -f bgp-svc.yaml
```
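If the Service receives an external IP address but requests fail, a common cause is a mismatch between the Service and the Deployment. The following Python sketch assumes both manifests have already been loaded into dictionaries with the same structure as the YAML files in Steps 5 and 6 (for example, with a YAML parser). It checks that the Service selector matches the Pod template labels and that each numeric `targetPort` (which defaults to `port` when omitted) is a declared `containerPort`. Named target ports are not handled, and the function name is hypothetical.

```python
def check_service_targets(service: dict, deployment: dict) -> list[str]:
    """Hypothetical check: does the Service select the Deployment's Pods and ports?"""
    errors = []
    selector = service["spec"].get("selector", {})
    pod_template = deployment["spec"]["template"]
    labels = pod_template["metadata"].get("labels", {})
    # Every selector key/value pair must appear in the Pod template labels.
    for key, value in selector.items():
        if labels.get(key) != value:
            errors.append(f"selector {key}={value} does not match Pod labels")

    container_ports = {
        p["containerPort"]
        for c in pod_template["spec"]["containers"]
        for p in c.get("ports", [])
    }
    for port in service["spec"]["ports"]:
        # targetPort defaults to the Service port when it is not set.
        target = port.get("targetPort", port["port"])
        # Only numeric target ports are checked; named ports are skipped.
        if isinstance(target, int) and target not in container_ports:
            errors.append(f"targetPort {target} is not a containerPort")
    return errors
```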

Step 7: Verify OpenELB in BGP Mode

The following verifies whether OpenELB functions properly.

In the Kubernetes cluster, run the following command to obtain the external IP address of the Service:

```
root@master1:~# kubectl get svc
NAME         TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.233.0.1     <none>        443/TCP        20h
bgp-svc      LoadBalancer   10.233.15.12   172.22.0.2    80:32278/TCP   6m9s
```

In the Kubernetes cluster, run the following command to obtain the IP addresses of the cluster nodes:

```
root@master1:~# kubectl get nodes -o wide
NAME          STATUS   ROLES    AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
master1       Ready    master   20h   v1.17.9   192.168.0.2   <none>        Ubuntu 18.04.3 LTS   4.15.0-55-generic   docker://19.3.11
worker-p001   Ready    worker   20h   v1.17.9   192.168.0.3   <none>        Ubuntu 18.04.3 LTS   4.15.0-55-generic   docker://19.3.11
worker-p002   Ready    worker   20h   v1.17.9   192.168.0.4   <none>        Ubuntu 18.04.3 LTS   4.15.0-55-generic   docker://19.3.11
```

On the BIRD machine, run the following command to check the routing table. If the route to the Service's external IP address lists Kubernetes cluster nodes as equal-cost next hops, OpenELB functions properly.

```bash
ip route
```

If `spec:externalTrafficPolicy` in the Service YAML configuration is set to `Cluster`, all Kubernetes cluster nodes are used as the next hops.

```
default via 192.168.0.1 dev eth0
172.22.0.2 proto bird metric 64
        nexthop via 192.168.0.2 dev eth0 weight 1
        nexthop via 192.168.0.3 dev eth0 weight 1
        nexthop via 192.168.0.4 dev eth0 weight 1
192.168.0.0/24 dev eth0 proto kernel scope link src 192.168.0.5
```

If `spec:externalTrafficPolicy` in the Service YAML configuration is set to `Local`, only Kubernetes cluster nodes that contain Pods are used as the next hops.

```
default via 192.168.0.1 dev eth0
172.22.0.2 proto bird metric 64
        nexthop via 192.168.0.3 dev eth0 weight 1
        nexthop via 192.168.0.4 dev eth0 weight 1
192.168.0.0/24 dev eth0 proto kernel scope link src 192.168.0.5
```

On the BIRD machine, run the `curl` command to access the Service:

```
root@i-f3fozos0:~# curl 172.22.0.2
You've hit bgp-openelb-648bcf8d7c-86l8k
root@i-f3fozos0:~# curl 172.22.0.2
You've hit bgp-openelb-648bcf8d7c-pngxj
```
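Repeating the request should eventually reach both Pods. The following Python sketch sends several HTTP requests to the external IP address and collects the distinct Pod names from the `You've hit <pod-name>` responses returned by the luksa/kubia image. The request count, the 5-second timeout, and the `fetch` parameter (which lets the network call be replaced, for example in tests) are assumptions of this sketch. Because a backend Pod is chosen per connection, a small number of requests may not reach every Pod.

```python
import urllib.request
from typing import Callable


def fetch_url(url: str) -> str:
    """Send one HTTP GET request and return the response body as text."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode()


def distinct_backends(url: str, requests: int = 10,
                      fetch: Callable[[str], str] = fetch_url) -> set[str]:
    """Collect the Pod names reported in 'You've hit <pod-name>' responses."""
    prefix = "You've hit "
    pods = set()
    for _ in range(requests):
        body = fetch(url).strip()
        if body.startswith(prefix):
            pods.add(body[len(prefix):])
    return pods


# Usage on the BIRD machine (performs real HTTP requests):
#   print(distinct_backends("http://172.22.0.2/"))
```

With two replicas, a result containing two Pod names indicates that traffic to the external IP address is being spread across both backends.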
